Guaranteeing throughput with QoS

You can use storage quality of service (QoS) to guarantee that performance of critical workloads is not degraded by competing workloads. You can set a throughput ceiling on a competing workload to limit its impact on system resources, or set a throughput floor for a critical workload, ensuring that it meets minimum throughput targets, regardless of demand by competing workloads. You can even set a ceiling and floor for the same workload.

Understanding throughput ceilings (QoS Max)

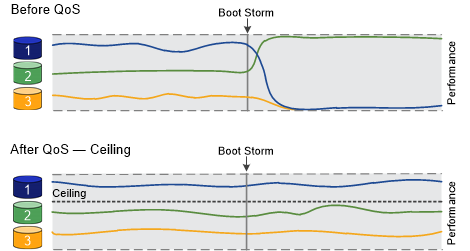

A throughput ceiling limits throughput for a workload to a maximum number of IOPS or MBps, or IOPS and MBps. In the figure below, the throughput ceiling for workload 2 ensures that it does not bully

workloads 1 and 3.

A policy group defines the throughput ceiling for one or more workloads. A workload represents the I/O operations for a storage object: a volume, file, qtree, or LUN, or all the volumes, files, qtrees, or LUNs in an SVM. You can specify the ceiling when you create the policy group, or you can wait until after you monitor workloads to specify it.

Understanding throughput floors (QoS Min)

A throughput floor guarantees that throughput for a workload does not fall below a minimum number of IOPS or MBps, or IOPS and MBps. In the figure below, the throughput floors for workload 1 and workload 3 ensure that they meet minimum throughput targets, regardless of demand by workload 2.

A policy group that defines a throughput floor cannot be applied to an SVM. You can specify the floor when you create the policy group, or you can wait until after you monitor workloads to specify it.

A workload might fall below the specified floor during critical operations like volume move trigger-cutover. Even when sufficient capacity is available and critical operations are not taking place, throughput to a workload might fall below the specified floor by up to 5%. If floors are overprovisioned and there is no performance capacity, some workloads might fall below the specified floor.
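The two numeric conditions described above (the shortfall of up to 5% and overprovisioned floors) can be checked with simple arithmetic. The following is a minimal sketch for planning purposes only: the function names and the idea of summarizing performance capacity as a single `capacity_iops` figure are assumptions made for illustration, not part of ONTAP.

```python
from typing import Sequence


def min_expected_iops(floor_iops: float, tolerance: float = 0.05) -> float:
    """Lowest throughput still consistent with the floor, allowing the
    shortfall of up to 5% described in the text (hypothetical helper)."""
    return floor_iops * (1 - tolerance)


def floors_overprovisioned(floors_iops: Sequence[float], capacity_iops: float) -> bool:
    """True when the configured floors add up to more than the available
    performance capacity (capacity_iops is an assumed, simplified figure)."""
    return sum(floors_iops) > capacity_iops


if __name__ == "__main__":
    # A 1,000 IOPS floor may briefly deliver about 950 IOPS.
    print(round(min_expected_iops(1000)))
    # Three floors totalling 9,000 IOPS on a system assumed to sustain 8,000.
    print(floors_overprovisioned([3000, 3000, 3000], 8000))
```

If `floors_overprovisioned` returns `True` for a planned configuration, some of the workloads might not reach their floors under load.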

Understanding shared and non-shared QoS policy groups

Starting with ONTAP 9.5, you can use a non-shared QoS policy group to specify that the defined throughput ceiling or floor applies to each member workload individually. Behavior of shared policy groups depends on the policy type:

For throughput ceilings, the total throughput for the workloads assigned to the shared policy group cannot exceed the specified ceiling.

For throughput floors, the shared policy group can be applied to a single workload only.
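The difference between shared and non-shared ceilings can be illustrated with a short calculation. This is a sketch under stated assumptions: `max_aggregate_iops` is a hypothetical helper, and it models only the upper bound on combined throughput, not how ONTAP schedules I/O.

```python
def max_aggregate_iops(ceiling_iops: int, workloads: int, shared: bool) -> int:
    """Upper bound on the combined throughput of all member workloads.

    shared=True:  the ceiling caps the total across all members.
    shared=False: the ceiling applies to each member individually.
    """
    if workloads < 0:
        raise ValueError("workloads must be non-negative")
    if workloads == 0:
        return 0
    return ceiling_iops if shared else ceiling_iops * workloads


if __name__ == "__main__":
    # Three volumes in a policy group with a 1,000 IOPS ceiling.
    print(max_aggregate_iops(1000, 3, shared=True))   # 1000
    print(max_aggregate_iops(1000, 3, shared=False))  # 3000
```

With a shared group, the three workloads compete for the same 1,000 IOPS. With a non-shared group, each workload can reach 1,000 IOPS on its own.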

Understanding adaptive QoS

Ordinarily, the value of the policy group you assign to a storage object is fixed. You need to change the value manually when the size of the storage object changes. An increase in the amount of space used on a volume, for example, usually requires a corresponding increase in the throughput ceiling specified for the volume.

Adaptive QoS automatically scales the policy group value to workload size, maintaining the ratio of IOPS to TB or GB as the size of the workload changes. That is a significant advantage when you are managing hundreds or thousands of workloads in a large deployment.
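As a rough illustration of that scaling, the sketch below keeps a fixed IOPS-per-TB ratio and recomputes the limit as the workload grows. It is a simplification that models only the ratio described above; `adaptive_limit_iops` and the sample ratio are assumptions chosen for the example, not ONTAP parameters.

```python
def adaptive_limit_iops(iops_per_tb: float, size_tb: float) -> float:
    """Throughput limit that preserves a fixed IOPS-to-TB ratio
    (hypothetical helper; real policies may involve more inputs)."""
    if size_tb < 0:
        raise ValueError("size_tb must be non-negative")
    return iops_per_tb * size_tb


if __name__ == "__main__":
    ratio = 6144  # IOPS per TB, an example value
    for size_tb in (1, 2, 4):
        # The limit grows in proportion to the workload size.
        print(size_tb, "TB ->", adaptive_limit_iops(ratio, size_tb), "IOPS")
```

With a fixed policy group value, each of those size changes would instead require a manual update.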

Starting with ONTAP 9.5, you can specify an I/O block size for your application that enables a throughput limit to be expressed in both IOPS and MBps. The MBps limit is calculated by multiplying the block size by the IOPS limit. For example, an I/O block size of 32K and an IOPS limit of 6144 IOPS/TB yield an MBps limit of 192 MBps.
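The arithmetic behind that example can be reproduced in a few lines. This sketch assumes that 1 MB equals 1024 KB, which is the conversion that yields the 192 MBps figure; `mbps_limit` is a hypothetical helper name.

```python
def mbps_limit(iops_limit: float, block_size_kb: float) -> float:
    """MBps limit derived from an IOPS limit and an I/O block size in KB.

    Assumes 1 MB = 1024 KB (an assumption that matches the worked example).
    """
    return iops_limit * block_size_kb / 1024


if __name__ == "__main__":
    # 32K block size with a limit of 6144 IOPS.
    print(mbps_limit(6144, 32))  # 192.0
```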

You can expect the following behavior for both throughput ceilings and floors:

When a workload is assigned to an adaptive QoS policy group, the ceiling or floor is updated immediately.

When a workload in an adaptive QoS policy group is resized, the ceiling or floor is updated in approximately five minutes.

Throughput must increase by at least 10 IOPS before updates take place.

Adaptive QoS policy groups are always non-shared: the defined throughput ceiling or floor applies to each member workload individually.

General support

The following table shows the differences in support for throughput ceilings, throughput floors, and adaptive QoS.

| Resource or feature | Throughput ceiling | Throughput floor | Throughput floor v2 | Adaptive QoS |
|---|---|---|---|---|
| ONTAP 9 version | All | 9.5 and later | 9.7 and later | 9.5 and later |
| Platforms | All | | | All |
| Protocols | All | All | All | All |
| FabricPool | Yes | Yes, if the tiering policy is set to "none" and no blocks are in the cloud. | Yes, if the tiering policy is set to "none" and no blocks are in the cloud. | Yes |
| SnapMirror Synchronous | Yes | No | No | Yes |

Supported workloads for throughput ceilings

The following table shows workload support for throughput ceilings by ONTAP 9 version. Root volumes, load-sharing mirrors, and data protection mirrors are not supported.

| Workload support - ceiling | 9.5 and later | 9.8 and later |
|---|---|---|
| Volume | yes | yes |
| File | yes | yes |
| LUN | yes | yes |
| SVM | yes | yes |
| FlexGroup volume | yes | yes |
| Multiple workloads per policy group | yes | yes |
| Non-shared policy groups | yes | yes |

Supported workloads for throughput floors

The following table shows workload support for throughput floors by ONTAP 9 version. Root volumes, load-sharing mirrors, and data protection mirrors are not supported.

| Workload support - floor | 9.5 and later | 9.8 and later |
|---|---|---|
| Volume | yes | yes |
| File | yes | yes |
| LUN | yes | yes |
| SVM | no | yes |
| FlexGroup volume | yes | yes |
| Multiple workloads per policy group | yes | yes |
| Non-shared policy groups | yes | yes |

*Starting from ONTAP 9.8, NFS access is supported in qtrees in FlexVol and FlexGroup volumes with NFS enabled. Starting from ONTAP 9.9.1, SMB access is also supported in qtrees in FlexVol and FlexGroup volumes with SMB enabled.

Supported workloads for adaptive QoS

The following table shows workload support for adaptive QoS by ONTAP 9 version. Root volumes, load-sharing mirrors, and data protection mirrors are not supported.

| Workload support - adaptive QoS | 9.5 and later |
|---|---|
| Volume | yes |
| File | yes |
| LUN | yes |
| SVM | no |
| FlexGroup volume | yes |
| Multiple workloads per policy group | yes |
| Non-shared policy groups | yes |

Maximum number of workloads and policy groups

The following table shows the maximum number of workloads and policy groups by ONTAP 9 version.

| Workload support | 9.5 and later |
|---|---|
| Maximum workloads per cluster | 40,000 |
| Maximum workloads per node | 40,000 |
| Maximum policy groups | 12,000 |