PCIe slots and configurations

Slot configurations

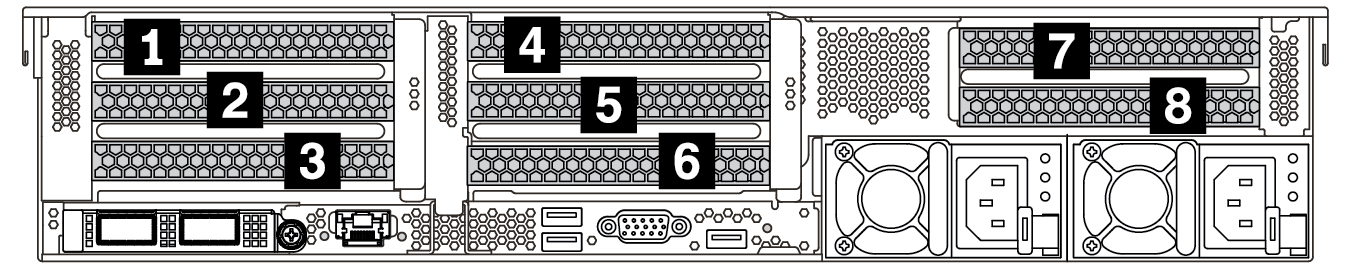

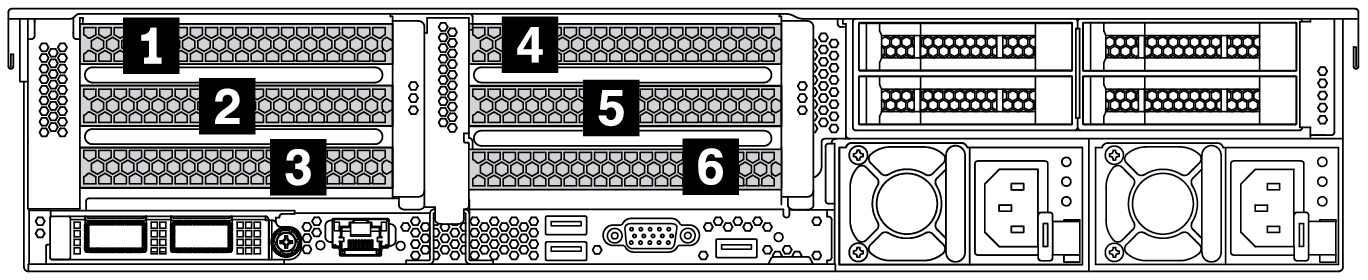

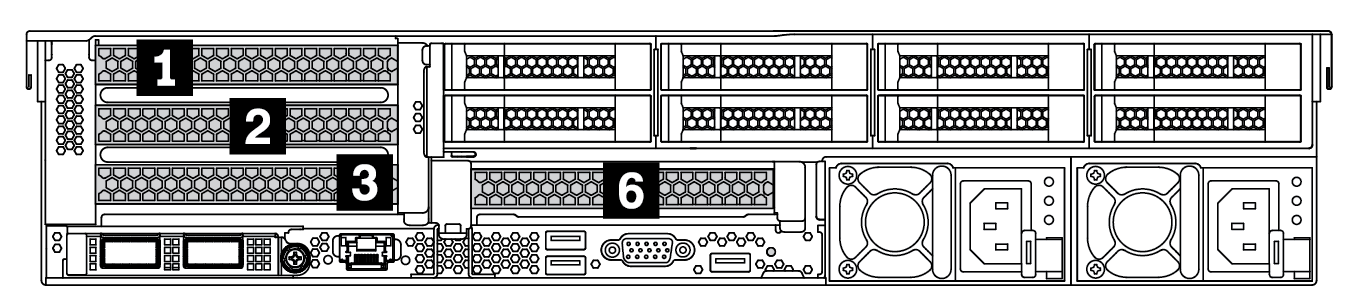

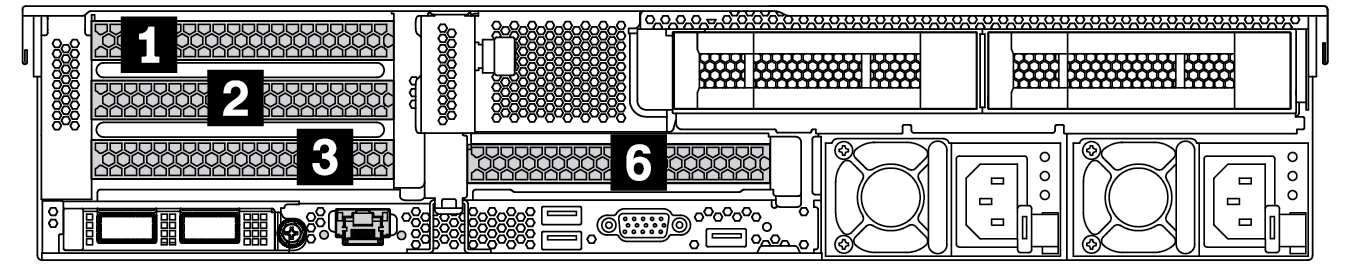

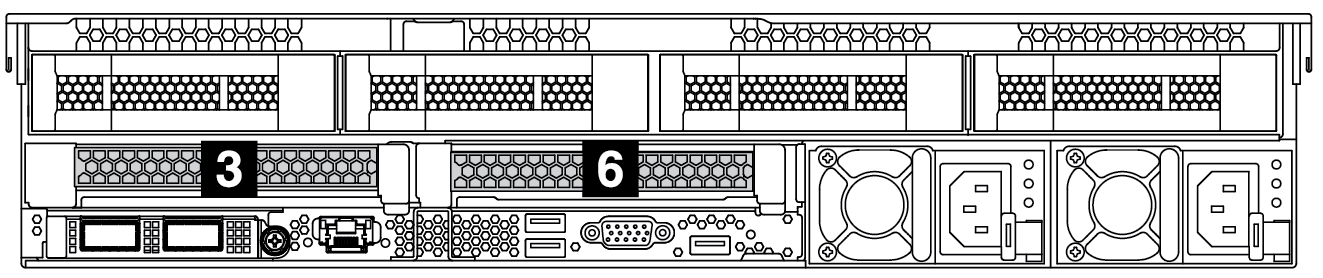

The server supports up to 8 PCIe slots on the rear. The PCIe slot configurations vary by server model.

For AMD EPYC 7002 series processors: Enable/Disable Onboard Device

For AMD EPYC 7003 series processors: Enable/Disable Onboard Device

| Server rear view | PCIe slots | ||

|---|---|---|---|

| Slots 1–3 on riser 1:

| Slots 4–6 on riser 2:

| Slots 7–8 on riser 3:

|

| Slots 1–3 on riser 1:

| Slots 4–6 on riser 2:

| NA |

| Slots 1–3 on riser 1:

| Slot 6 on riser 2: x16 | NA |

| Slots 1–3 on riser 1:

| Slot 6 on riser 2: x16 | NA |

| Slot 3 on riser 1: x16 | Slot 6 on riser 2: x16 | NA |

PCIe adapter and slot priority

The following table lists the recommended physical slot sequence for common PCIe adapters.

| PCIe adapter type | PCIe adapter | Max. qty. | Slot priority |
|---|---|---|---|
| GPU | Single-wide LPHL (40W/75W) | 8 | |
| GPU | Single-wide FHFL (150W) | 3 | |
| GPU | Double-wide FHFL (250W/300W) | 3 | Note: To install a double-wide GPU, one of the riser cages is required: |
| NIC | Xilinx Alveo U25 FPGA | 2 | |
| NIC | Xilinx Alveo U50 FPGA | 6 | |
| NIC | Broadcom 57508 100GbE 2-port | 6 | Note: The |
| NIC | Broadcom 57454 10/25GbE SFP28 4-port PCIe Ethernet Adapter_Refresh (V2) | | |
| NIC | Broadcom 57504 10/25GbE SFP28 4-port | | |
| NIC | Mellanox ConnectX-6 HDR100 IB/100GbE VPI 1-port | | |
| NIC | Mellanox ConnectX-6 HDR100 IB/100GbE VPI 2-port | | |
| NIC | Mellanox ConnectX-6 HDR100 IB/200GbE VPI 2-port | | |
| NIC | Mellanox ConnectX-6 Dx 100GbE 2-port | | |
| NIC | Intel E810-DA4 10/25GbE SFP28 4-port | | |
| NIC | NVIDIA ConnectX-7 NDR400 OSFP 1-port PCIe Gen5 Adapter | | |
| NIC | NVIDIA ConnectX-7 NDR200/200GbE QSFP112 2-port PCIe Gen5 x16 InfiniBand Adapter | | |
| NIC | Others | 8 | Note: The |
| Internal SFF RAID/HBA | 430-8i/4350-8i HBA | 4 | |
| Internal SFF RAID/HBA | 530-8i/5350-8i/540-8i/930-8i/940-8i RAID | | |
| Internal SFF RAID/HBA | 430-16i/4350-16i/440-16i HBA | 1 | |
| Internal SFF RAID/HBA | 530-16i/540-16i/930-16i/930-24i/940-16i/940-32i RAID | | |
| Internal SFF RAID/HBA | 9350-8i | 4 | |
| Internal SFF RAID/HBA | 9350-16i | 1 | |
| Internal CFF RAID/HBA/RAID expander | | 1 | Front chassis |
| External RAID/HBA | 930-8e/940-8e RAID | 4 | NA |
| External RAID/HBA | Others | 8 | |
| NVMe switch/retimer | NVMe switch | 4 | |
| NVMe switch/retimer | Retimer card | 4 | Note: CM6-V, CM6-R and CM5-V NVMe drives are not supported when a system is configured with Retimer cards. |
| FC HBA | | 8 | Note: For more information about supported FC HBA adapters, see |
| PCIe SSD | | 8 | |
| 7mm drive cage | | 1 | Slot 3 or 6 |
| Serial port module | | 1 | Slot 3 or 6 |
| OCP 3.0 | | 1 | OCP slot |
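As a rough illustration of how a recommended slot sequence is applied, the sketch below fills the first free slots in priority order and rejects requests above the Max. qty. values from the table. The per-adapter slot priority lists did not survive in this section, so any priority sequence passed in is a hypothetical placeholder, and `assign_slots` and `MAX_QTY` are illustrative names, not part of any Lenovo tool.

```python
# Max. qty. values copied from the PCIe adapter and slot priority table.
MAX_QTY = {
    "Single-wide LPHL (40W/75W)": 8,
    "Single-wide FHFL (150W)": 3,
    "Double-wide FHFL (250W/300W)": 3,
    "Xilinx Alveo U25 FPGA": 2,
    "Xilinx Alveo U50 FPGA": 6,
    "OCP 3.0": 1,
}


def assign_slots(adapter: str, quantity: int, priority: list[int], occupied: set[int]) -> list[int]:
    """Place `quantity` adapters in the first free slots of `priority`.

    `priority` is supplied by the caller; the real per-adapter slot priority
    lists are not reproduced here, so any sequence passed in is hypothetical.
    `occupied` is updated in place with the slots that were assigned.
    """
    limit = MAX_QTY.get(adapter)
    if limit is not None and quantity > limit:
        raise ValueError(f"{adapter}: max. qty. is {limit}, requested {quantity}")
    chosen: list[int] = []
    for slot in priority:
        if len(chosen) == quantity:
            break
        if slot not in occupied:  # skip slots already holding an adapter
            chosen.append(slot)
    if len(chosen) < quantity:
        raise ValueError(f"{adapter}: only {len(chosen)} free slot(s) in the priority list")
    occupied.update(chosen)
    return chosen
```

For example, with slot 1 already occupied, `assign_slots("Xilinx Alveo U25 FPGA", 2, [1, 2, 3, 4, 5, 6], {1})` returns `[2, 3]`, and requesting three U25 adapters raises an error because the table allows at most two.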

RAID rules

Gen3 and Gen4 RAID/HBA adapters cannot be installed together on one riser card.

RAID controllers of the same PCIe generation group can be installed on one riser card.

RAID and HBA controllers can be installed on one riser card.
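The riser rules above can be checked mechanically. This is a minimal sketch, assuming the slot-to-riser layout of the fully populated configuration in the slot configuration table (slots 1–3 on riser 1, 4–6 on riser 2, 7–8 on riser 3), and assuming the caller knows each RAID/HBA adapter's PCIe generation, which this section does not list. Because RAID and HBA controllers may share a riser, only the generation is compared. The function name is hypothetical.

```python
from collections import defaultdict

# Slot-to-riser mapping from the fully populated configuration:
# slots 1-3 on riser 1, slots 4-6 on riser 2, slots 7-8 on riser 3.
SLOT_TO_RISER = {1: 1, 2: 1, 3: 1, 4: 2, 5: 2, 6: 2, 7: 3, 8: 3}


def riser_generation_conflicts(placements: dict[int, int]) -> dict[int, set[int]]:
    """Return the risers that hold RAID/HBA adapters of more than one PCIe generation.

    `placements` maps slot number -> PCIe generation (for example 3 or 4) of the
    RAID/HBA adapter in that slot. The generation must come from the caller.
    """
    gens_per_riser: dict[int, set[int]] = defaultdict(set)
    for slot, gen in placements.items():
        if slot not in SLOT_TO_RISER:
            raise ValueError(f"slot {slot} is not a rear PCIe slot")
        gens_per_riser[SLOT_TO_RISER[slot]].add(gen)
    # A riser is in conflict when Gen3 and Gen4 adapters share it.
    return {riser: gens for riser, gens in gens_per_riser.items() if len(gens) > 1}
```

For example, `riser_generation_conflicts({1: 3, 3: 4})` returns `{1: {3, 4}}`, flagging riser 1, while `{1: 4, 2: 4, 4: 3}` returns an empty dict because each riser holds a single generation.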

The RAID/HBA 4350/5350/9350 adapters cannot be mixed with the following adapters in the same system:

- RAID/HBA 430/530/930 adapters
- RAID/HBA 440/940/540 adapters, except for the external RAID/HBA 440-8e/440-16e/940-8e adapters
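The mixing rule can be expressed as a small check. This sketch assumes adapter models are written as in this section (for example `930-8i` or `440-16e`), so the family is the part before the hyphen; `mixing_violations` is a hypothetical helper that returns the adapters conflicting with a 4350/5350/9350 adapter in the same system.

```python
NEW_FAMILIES = {"4350", "5350", "9350"}
OLD_FAMILIES = {"430", "530", "930", "440", "940", "540"}
# External adapters explicitly allowed alongside the 4350/5350/9350 family.
EXEMPT = {"440-8e", "440-16e", "940-8e"}


def family(model: str) -> str:
    """Return the adapter family, e.g. '930-8i' -> '930'."""
    return model.split("-")[0]


def mixing_violations(models: list[str]) -> list[str]:
    """List the adapters that may not share a system with a 4350/5350/9350 adapter."""
    if not any(family(m) in NEW_FAMILIES for m in models):
        return []  # the rule only applies when a 4350/5350/9350 adapter is present
    return [m for m in models if family(m) in OLD_FAMILIES and m not in EXEMPT]
```

For example, `mixing_violations(["9350-16i", "930-8i", "440-16e"])` returns `["930-8i"]`: the 440-16e is one of the listed external exceptions, while the 930-8i is not.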

PCIe SSDs do not support the RAID function.

The RAID 940-8i or RAID 940-16i adapter supports Tri-mode. When Tri-mode is enabled, the server supports SAS, SATA and U.3 NVMe drives at the same time. NVMe drives are connected via a PCIe x1 link to the controller.

Note: To support Tri-mode with U.3 NVMe drives, U.3 x1 mode must be enabled for the selected drive slots on the backplane through the XCC Web GUI. Otherwise, the U.3 NVMe drives cannot be detected. For more information, see "U.3 NVMe drive can be detected in NVMe connection, but cannot be detected in Tri-mode".

The HBA 430/440 adapters do not support the Self-Encrypting Drive (SED) management feature.

930/9350/940 RAID controllers require a super capacitor.

Oversubscription occurs when the system supports 32 NVMe drives using NVMe switch adapters. For details, see NVMe drive support.
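To make the oversubscription concrete, the ratio can be estimated as drive-side lanes divided by host-side lanes. This is a back-of-the-envelope sketch under stated assumptions, not figures from this section: each NVMe drive is assumed to use a x4 link and each NVMe switch adapter a x16 host uplink. Only the 32-drive count and the maximum of 4 NVMe switch adapters come from this page.

```python
def oversubscription_ratio(drives: int, switches: int,
                           lanes_per_drive: int = 4,
                           uplink_lanes: int = 16) -> float:
    """Ratio of drive-side PCIe lanes to host-side lanes behind NVMe switches.

    lanes_per_drive=4 and uplink_lanes=16 are ASSUMED defaults, not values
    stated in this section.
    """
    if switches <= 0:
        raise ValueError("at least one NVMe switch adapter is required")
    downstream = drives * lanes_per_drive  # lanes fanned out to the drives
    upstream = switches * uplink_lanes     # lanes from the switches to the host
    return downstream / upstream
```

Under those assumptions, 32 drives behind 4 switch adapters give 128 drive lanes over 64 host lanes, so `oversubscription_ratio(32, 4)` returns `2.0`, a 2:1 oversubscription.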