PCIe slots and PCIe adapters

This topic provides installation rules for PCIe adapters.

Slot configurations

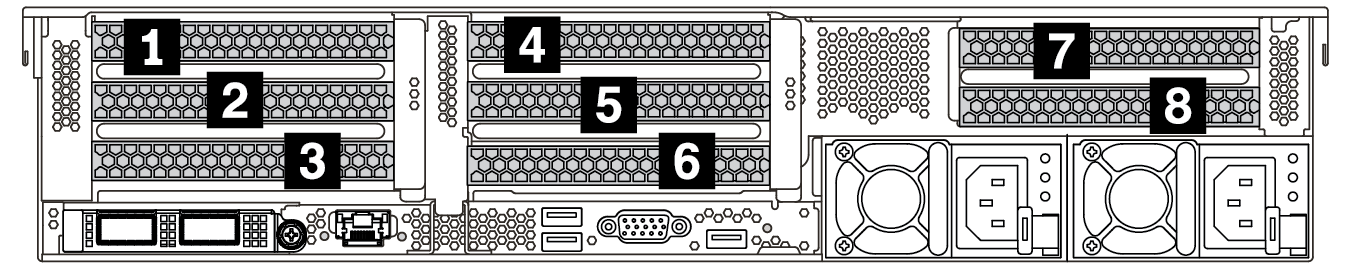

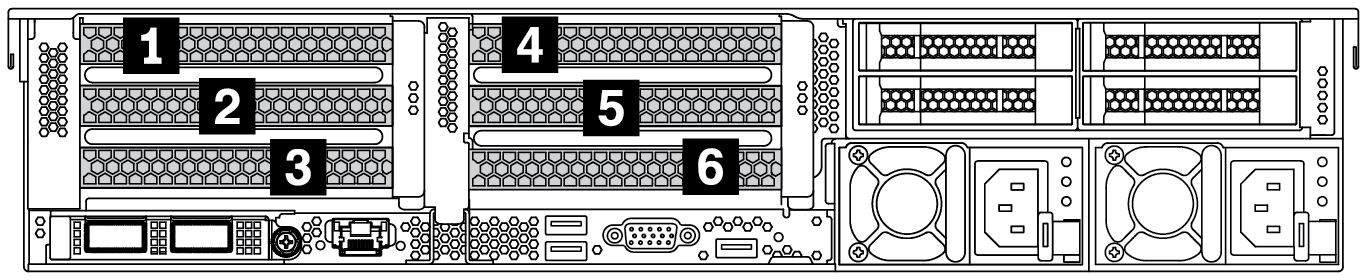

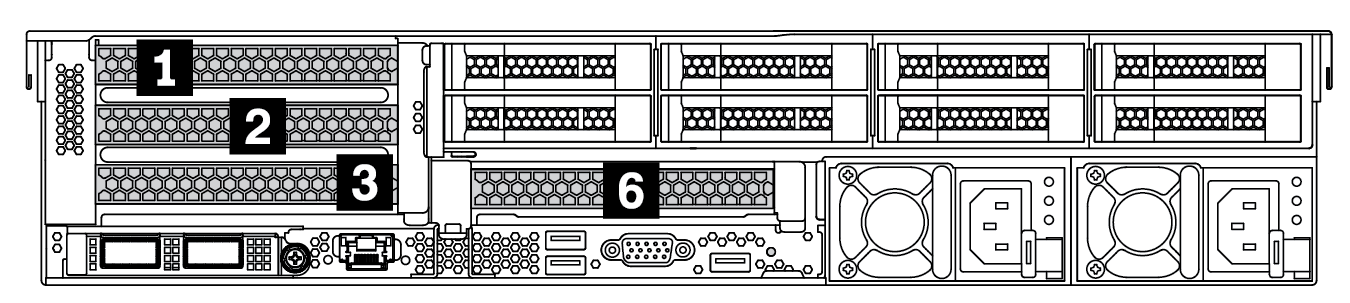

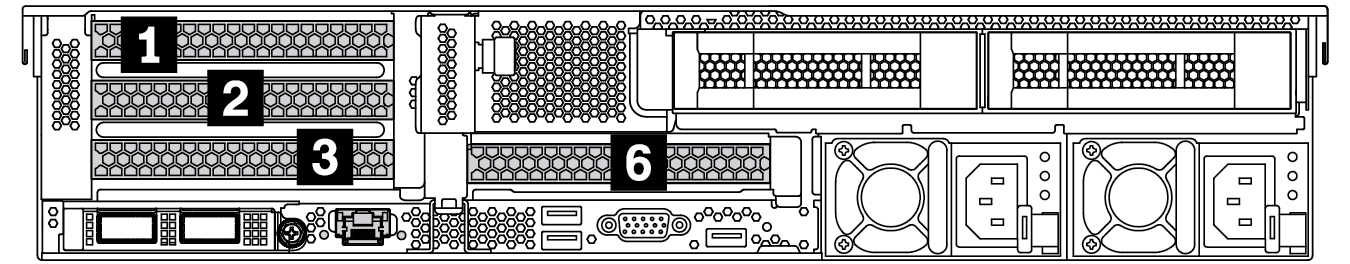

Your server supports the following rear configurations with different types of riser cards.

When only one processor is installed, the server supports riser 1 and riser 3. If the 12 x 3.5-inch AnyBay Expander backplane is installed, then riser 3 is not supported.

When two processors are installed, the server supports riser 1, riser 2, and riser 3. Riser 1 must be selected before you can select riser 2 or riser 3.
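The riser rules above can be sketched as a small lookup. This is only an illustration: the function names and the `anybay_expander_12x35` parameter are hypothetical, and the sketch assumes the AnyBay Expander restriction applies only to the one-processor case, because that is the only place the text states it.

```python
def supported_risers(processors, anybay_expander_12x35=False):
    """Return the riser numbers the text allows for a processor count."""
    if processors == 1:
        # One processor: riser 1 and riser 3; riser 3 is dropped when the
        # 12 x 3.5-inch AnyBay Expander backplane is installed.
        return [1] if anybay_expander_12x35 else [1, 3]
    if processors == 2:
        return [1, 2, 3]
    raise ValueError("the text covers one or two processors only")


def is_valid_selection(processors, selected, anybay_expander_12x35=False):
    """A selection must include riser 1 and use only supported risers."""
    allowed = set(supported_risers(processors, anybay_expander_12x35))
    chosen = set(selected)
    return 1 in chosen and chosen <= allowed
```

For example, `is_valid_selection(2, [2, 3])` is rejected because riser 1 is missing.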

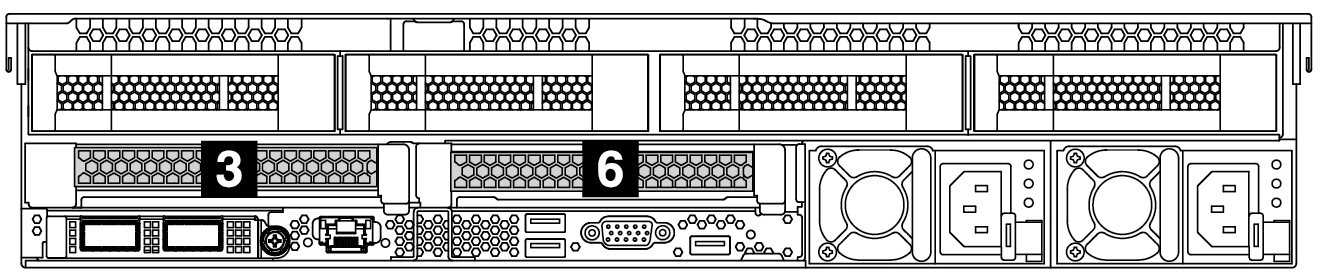

| Server rear view | PCIe slots | | |
|---|---|---|---|
| | Slots 1–3 on riser 1 | Slots 4–6 on riser 2 | Slots 7–8 on riser 3 |
| | Slots 1–3 on riser 1 | Slots 4–6 on riser 2 | NA |
| | Slots 1–3 on riser 1 | Slot 6 on riser 2: x16 | NA |
| | Slots 1–3 on riser 1 | Slot 6 on riser 2: x16 | NA |
| | Slot 3 on riser 1: x16 | Slot 6 on riser 2: x16 | NA |

7mm drive cage installation rules:

- For server models with 8 PCIe slots or a 4 x 2.5-inch rear drive cage, a 2FH+7mm SSD drive cage can be installed on slot 3 or slot 6, but not both at the same time.
- For server models with an 8 x 2.5-inch/2 x 3.5-inch rear drive cage, one of the following 7mm drive cages can be installed:
  - 2FH+7mm SSD drive cage: slot 3
  - 7mm SSD drive cage: slot 6
- For server models with a 4 x 3.5-inch rear drive cage or a GPU installed, a low-profile 7mm drive cage can be installed only on slot 6.
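As an illustration, the 7mm drive cage rules above can be written as a lookup table. The configuration keys (such as `"8_pcie_slots"`) and the function name are hypothetical labels chosen for this sketch; only the cage names and slot numbers come from the rules.

```python
# Hypothetical configuration labels -> cage type -> slots allowed by the rules.
SEVEN_MM_CAGE_SLOTS = {
    "8_pcie_slots": {"2FH+7mm SSD drive cage": [3, 6]},
    "4x2.5_rear": {"2FH+7mm SSD drive cage": [3, 6]},
    "8x2.5_or_2x3.5_rear": {
        "2FH+7mm SSD drive cage": [3],
        "7mm SSD drive cage": [6],
    },
    "4x3.5_rear_or_gpu": {"low-profile 7mm drive cage": [6]},
}


def can_install_7mm_cage(config, cage, slot, cage_already_installed=False):
    """Check one cage placement against the rules above."""
    if cage_already_installed:
        # Every rule above allows a single 7mm drive cage per server.
        return False
    return slot in SEVEN_MM_CAGE_SLOTS.get(config, {}).get(cage, [])
```

Keeping the rules in a table rather than in nested conditions makes it easier to compare the sketch line by line with the list above.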

Serial port module installation rules:

- For server models with 8 PCIe slots or a 4 x 2.5-inch rear drive cage:
  - If both riser 1 and riser 2 use the x16/x16/E riser card and a 7mm drive cage is installed on slot 6, a serial port module can be installed on slot 3.
  - If only one of riser 1 and riser 2 uses the x16/x16/E riser card, a 7mm drive cage and a serial port module cannot be installed at the same time. If no 7mm drive cage is installed, a serial port module can be installed on slot 6.
  - If neither riser 1 nor riser 2 uses the x16/x16/E riser card, no serial port module is supported.
- For server models with an 8 x 2.5-inch/2 x 3.5-inch rear drive cage:
  - If riser 1 uses the x16/x16/E riser card, a serial port module can be installed on slot 3 and a 7mm SSD cage can be installed on slot 6.
  - If riser 1 does not use the x16/x16/E riser card, a 7mm drive cage and a serial port module cannot be installed at the same time. If no 7mm drive cage is installed, a serial port module can be installed on slot 6.
- For server models with a 4 x 3.5-inch rear drive cage, a 7mm drive cage and a serial port module cannot be installed at the same time. If no 7mm drive cage is installed, a serial port module can be installed on slot 3 or slot 6.
- For server models with a double-wide GPU, the serial port module can be installed only on slot 6.
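The serial port module rules branch on several conditions, so a decision function may help when checking a planned configuration. The following is a minimal sketch, not a validated tool: the configuration labels and parameter names are hypothetical, and cases the rules do not state (for example, both risers using the x16/x16/E card with no 7mm drive cage on slot 6) raise an error instead of guessing.

```python
def serial_port_slots(config, riser1_x16x16e=False, riser2_x16x16e=False,
                      cage_slot=None):
    """Return the slots where the rules above allow a serial port module.

    config is a hypothetical label: "8_slots_or_4x2.5_rear",
    "8x2.5_or_2x3.5_rear", "4x3.5_rear", or "double_wide_gpu".
    cage_slot is the slot holding a 7mm drive cage, or None if there is none.
    """
    has_cage = cage_slot is not None
    if config == "double_wide_gpu":
        # The rules do not say how this interacts with a 7mm cage on slot 6.
        return [6]
    if config == "4x3.5_rear":
        return [] if has_cage else [3, 6]
    if config == "8x2.5_or_2x3.5_rear":
        if riser1_x16x16e:
            return [3]
        return [] if has_cage else [6]
    if config == "8_slots_or_4x2.5_rear":
        if riser1_x16x16e and riser2_x16x16e:
            if cage_slot == 6:
                return [3]
            raise NotImplementedError("case not stated in the rules above")
        if riser1_x16x16e or riser2_x16x16e:
            return [] if has_cage else [6]
        return []  # neither riser uses the x16/x16/E riser card
    raise ValueError(f"unknown configuration label: {config!r}")
```

An empty list means that the rules do not allow a serial port module in that configuration.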

Supported PCIe adapters and slot priorities

The following table lists the recommended slot installation priority for common PCIe adapters.

| PCIe adapter | Maximum supported | Suggested slot priority |
|---|---|---|
| GPU adapter (see note below) | | |
| Double-wide GPU (V100S, A100, A40, A30, A6000, A16, A800, H100, L40) | 3 | |
| Double-wide GPU (AMD MI210) | 2 | |
| Single-wide GPU (P620, T4, A4, A2, L4) | 8 | |
| Single-wide GPU (A10) | 4 | |
| NVMe switch card (see note below) | | |
| ThinkSystem 1611-8P PCIe Gen4 Switch Adapter | 4 | 2 CPUs: 1, 2, 4, 5 |
| PCIe retimer card | | |
| ThinkSystem x16 Gen 4.0 Re-timer adapter | 3 | |
| Internal CFF RAID/HBA/Expander | | |
| 5350-8i, 9350-8i, 9350-16i | 1 | Not installed in PCIe slots. CFF RAID/HBA adapters are supported only in the 2.5-inch drive bay chassis. |
| 440-16i, 940-16i | | |
| ThinkSystem 48 port 12Gb Internal Expander | | |
| Internal SFF RAID/HBA adapter (see note below) | | |
| 9350-8i | 4 | |
| 9350-16i | 2 | |
| 430-8i, 4350-8i, 530-8i, 5350-8i, 930-8i | 4 | |
| 430-16i, 4350-16i, 530-16i, 930-16i | 2 | |
| 440-8i, 540-8i, 540-16i, 940-8i, 940-16i (8GB) | 4 | |
| 440-16i, 940-16i (4GB) | 2 | |
| 930-24i, 940-32i | 1 | |
| 940-8i (Tri-mode) | 3 | |
| 940-16i 4GB (Tri-mode) | 2 | |
| 940-16i 8GB (Tri-mode) | 4 | |
| External RAID/HBA adapter | | |
| 430-8e, 430-16e, 440-16e | 8 | |
| 930-8e, 940-8e | 4 | |
| PCIe SSD adapter | | |
| All supported PCIe SSD adapters | 8 | |
| FC HBA adapter | | |
| All supported FC HBA adapters | 8 | |
| NIC adapter | | |
| ThinkSystem NVIDIA BlueField-2 25GbE SFP56 2-Port PCIe Ethernet DPU w/BMC & Crypto | 1 | |
| Mellanox ConnectX-6 Lx 100GbE QSFP28 2-port PCIe Ethernet Adapter; Broadcom 57508 100GbE QSFP56 2-port PCIe 4 Ethernet Adapter; Broadcom 57508 100GbE QSFP56 2-port PCIe 4 Ethernet Adapter v2 | 6 | |
| Broadcom 57454 10/25GbE SFP28 4-port PCIe Ethernet Adapter_Refresh; ThinkSystem Intel E810-DA4 10/25GbE SFP28 4-port PCIe Ethernet Adapter; ThinkSystem Broadcom 57504 10/25GbE SFP28 4-port PCIe Ethernet Adapter | 6 | |
| Xilinx Alveo U50 (see note below) | 6 | |
| All other supported NIC adapters | 8 | |
| InfiniBand adapter | | |
| Mellanox ConnectX-6 HDR100 IB/100GbE VPI 1-port x16 PCIe 3.0 HCA w/ Tall Bracket | 6 | |
| Mellanox ConnectX-6 HDR100 IB/100GbE VPI 2-port x16 PCIe 3.0 HCA w/ Tall Bracket | | |
| ThinkSystem NVIDIA ConnectX-7 NDR400 OSFP 1-Port PCIe Gen5 Adapter | | |
| ThinkSystem NVIDIA ConnectX-7 NDR200/HDR QSFP112 2-Port PCIe Gen5 x16 InfiniBand Adapter (see note below) | | |
| Mellanox ConnectX-6 HDR IB/200GbE Single Port x16 PCIe Adapter w/ Tall Bracket | 6 | See the note below for detailed installation rules. |
| Mellanox HDR Auxiliary x16 PCIe 3.0 Connection Card Kit | 3 | |

Rules for GPU adapters:

- All installed GPU adapters must be identical.
- If a double-wide GPU adapter is installed in slot 5, 7, or 2, the adjacent slot 4, 8, or 1, respectively, is not available.
- If a single-wide 150W GPU adapter is installed in PCIe slot 1, 4, or 7, an Ethernet adapter of 100GbE or higher cannot be installed in the adjacent slot 2, 5, or 8, respectively.
- For thermal rules for supported GPUs, see Thermal rules.
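The GPU slot rules above pair each GPU slot with one adjacent slot. A minimal sketch of those pairings follows. The function and constant names are hypothetical, and thermal rules are out of scope here.

```python
# Double-wide GPU slot -> adjacent slot that becomes unavailable.
DOUBLE_WIDE_BLOCKS = {5: 4, 7: 8, 2: 1}
# Single-wide 150W GPU slot -> adjacent slot barred from 100GbE or faster Ethernet.
SINGLE_WIDE_150W_LIMITS = {1: 2, 4: 5, 7: 8}


def gpus_identical(models):
    """All installed GPU adapters must be the same model."""
    return len(set(models)) <= 1


def blocked_slots(double_wide_gpu_slots):
    """Slots made unavailable by double-wide GPUs in the given slots."""
    return sorted(DOUBLE_WIDE_BLOCKS[s] for s in double_wide_gpu_slots
                  if s in DOUBLE_WIDE_BLOCKS)


def ethernet_allowed(slot, speed_gbe, single_wide_150w_gpu_slots):
    """False if the slot sits next to a 150W GPU and the NIC is 100GbE or faster."""
    limited = {SINGLE_WIDE_150W_LIMITS[s] for s in single_wide_150w_gpu_slots
               if s in SINGLE_WIDE_150W_LIMITS}
    return not (slot in limited and speed_gbe >= 100)
```

For instance, `blocked_slots([2, 5])` reports slots 1 and 4 as unavailable.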

Oversubscription occurs when the system supports 32 NVMe drives using NVMe switch adapters. For details, see https://lenovopress.lenovo.com/lp1392-thinksystem-sr650-v2-server#nvme-drive-support.

Rules for the internal standard form factor (SFF) RAID/HBA adapters:

- RAID 930/940 series or 9350 series adapters require a RAID flash power module.
- RAID/HBA 430/530/930 adapters (Gen 3) and RAID/HBA 440/940 adapters (Gen 4) cannot be mixed in the same system.
- RAID/HBA adapters of the same generation (Gen 3 or Gen 4) can be mixed in the same system.
- The RAID/HBA 4350/5350/9350 adapters cannot be mixed with the following adapters in the same system:
  - RAID/HBA 430/530/930 adapters
  - RAID/HBA 440/540/940 adapters, except for external RAID/HBA 440-8e/440-16e/940-8e adapters
- The RAID 940-8i or RAID 940-16i adapter supports Tri-mode. When Tri-mode is enabled, the server supports SAS, SATA, and U.3 NVMe drives at the same time. NVMe drives are connected to the controller via a PCIe x1 link.

  Note: To support Tri-mode with U.3 NVMe drives, U.3 x1 mode must be enabled for the selected drive slots on the backplane through the XCC Web GUI. Otherwise, the U.3 NVMe drives cannot be detected. For more information, see U.3 NVMe drive can be detected in NVMe connection, but cannot be detected in Tri-mode.
- The virtual RAID on CPU (VROC) key and Tri-mode are not supported at the same time.
- For more information about controller selection for different server configurations, see Controller selections (2.5-inch chassis) and Controller selections (3.5-inch chassis).
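The RAID/HBA mixing rules above can be checked mechanically from the adapter model names. The sketch below is illustrative only. It assumes that model strings look like `"930-8i"` or `"440-16e"`, and it groups the 540 family with Gen 4 because the exception rule lists it alongside 440 and 940, which the generation rule itself does not state.

```python
GEN3 = {"430", "530", "930"}
GEN4 = {"440", "540", "940"}  # assumption: 540 is grouped with Gen 4
X350 = {"4350", "5350", "9350"}
X350_EXCEPTIONS = {"440-8e", "440-16e", "940-8e"}  # external adapters


def family(model):
    """Family prefix of a model string, e.g. "930" for "930-8i"."""
    return model.split("-")[0]


def mixing_violations(adapters):
    """Return a list of mixing rules that the adapter set breaks."""
    families = {family(a) for a in adapters}
    problems = []
    if families & GEN3 and families & GEN4:
        problems.append("Gen 3 and Gen 4 adapters are mixed")
    if families & X350:
        blocked = sorted(a for a in adapters
                         if family(a) in (GEN3 | GEN4)
                         and a not in X350_EXCEPTIONS)
        if blocked:
            problems.append("4350/5350/9350 mixed with " + ", ".join(blocked))
    return problems
```

An empty result means that the set passes the mixing rules. It does not cover the flash power module or Tri-mode requirements.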

To install the Xilinx Alveo U50 adapter, follow these rules:

- The ambient temperature must be limited to 30°C or lower.
- No fan has failed.
- No VMware operating system is installed.
- The Xilinx Alveo U50 adapter is not supported in server models with 24 x 2.5-inch drives or 12 x 3.5-inch drives.
- The Xilinx Alveo U50 adapter must be installed with the performance fan.
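The Xilinx Alveo U50 conditions above amount to a checklist, which could be expressed as follows. All parameter names and the drive configuration strings are hypothetical. The sketch only restates the listed conditions and does not query real hardware.

```python
def alveo_u50_violations(ambient_c, failed_fans, os_name, drive_config,
                         performance_fan):
    """Return the Alveo U50 conditions that a planned setup does not meet."""
    problems = []
    if ambient_c > 30:
        problems.append("ambient temperature above 30°C")
    if failed_fans > 0:
        problems.append("a fan has failed")
    if "vmware" in os_name.lower():
        problems.append("VMware operating system installed")
    if drive_config in {"24 x 2.5-inch", "12 x 3.5-inch"}:
        problems.append("unsupported drive configuration")
    if not performance_fan:
        problems.append("performance fan not installed")
    return problems
```

An empty list means that every listed condition is met.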

If one of the following InfiniBand adapters is installed, follow the rules below:

- Primary adapter: Mellanox ConnectX-6 HDR IB/200GbE Single Port x16 PCIe Adapter. Up to 6 adapters are supported, and they can be installed independently.
- Secondary adapter: Mellanox HDR Auxiliary x16 PCIe 3.0 Connection Card Kit. Up to 3 adapters are supported, and each must be installed with the primary adapter.

| Adapter selection | Adapter | Quantity | PCIe slot |
|---|---|---|---|
| Option 1 | Primary adapter | 1 | 1 or 2 |
| | Secondary adapter | 1 | 4 or 5 |
| Option 2 | Primary adapter | 2 | 1 and 2 |
| | Secondary adapter | 2 | 4 and 5 |
| Option 3 | Primary adapter | 3 | 1, 2, and 7 |
| | Secondary adapter | 3 | 4, 5, and 8 |
| Option 4 | Primary adapter only | Up to 6 | 1, 4, 7, 2, 5, 8 |

Attention: When the primary adapter is used with active optical cables (AOC) in the 12 x 3.5-inch or 24 x 2.5-inch configuration, follow Thermal rules and ensure that the ambient temperature is limited to 30°C or lower. This configuration might lead to high acoustic noise and is recommended for an industrial data center, not an office environment.

When both the primary adapter and GPU adapters are used at the same time, follow the thermal rules for GPU adapters. For detailed information, see Server models with GPUs.
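The four adapter selection options for the ConnectX-6 HDR primary adapter and the HDR Auxiliary secondary adapter can be captured as data and matched against a planned layout. This is a rough sketch under stated assumptions: the names are hypothetical, and because the source does not say whether a given primary slot must pair with a specific secondary slot, the sketch checks only quantities and allowed slot sets.

```python
# Option name -> (quantity, allowed slots) for primary and secondary adapters.
OPTIONS = {
    "Option 1": {"primary": (1, {1, 2}), "secondary": (1, {4, 5})},
    "Option 2": {"primary": (2, {1, 2}), "secondary": (2, {4, 5})},
    "Option 3": {"primary": (3, {1, 2, 7}), "secondary": (3, {4, 5, 8})},
}
# Option 4: primary adapters only, up to 6, slots as listed in the source.
PRIMARY_ONLY_SLOTS = [1, 4, 7, 2, 5, 8]


def matching_option(primary_slots, secondary_slots):
    """Return the option a layout matches, or None if it matches none."""
    p, s = set(primary_slots), set(secondary_slots)
    if not s:
        ok = 1 <= len(p) <= 6 and p <= set(PRIMARY_ONLY_SLOTS)
        return "Option 4" if ok else None
    for name, rule in OPTIONS.items():
        (p_qty, p_allowed) = rule["primary"]
        (s_qty, s_allowed) = rule["secondary"]
        if (len(p) == p_qty and p <= p_allowed
                and len(s) == s_qty and s <= s_allowed):
            return name
    return None
```

A result of `None` flags a layout that none of the listed options describes, such as one primary adapter with two secondary adapters.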

Rules for ThinkSystem NVIDIA ConnectX-7 NDR200/200GbE QSFP112 2-port PCIe Gen5 x16 InfiniBand Adapter:

- VMware 7.0 U3 does not support the adapter.
- VMware 8.0 U2 supports the adapter with the in-box driver.
- Windows Server 2019 and 2022 support the adapter.
- The adapter with active optical cables (AOC) is not supported in the 12 x 3.5-inch or 24 x 2.5-inch configuration with middle drive bays.
- When the adapter is installed, AOC is not supported in PCIe slot 3 and slot 6 in the following configurations:
  - 12 x 3.5-inch configuration
  - 24 x 2.5-inch configuration
  - 12 x 3.5-inch configuration with rear drive bays
  - 24 x 2.5-inch configuration with rear drive bays