Installing a DIMM

Use this information to install a DIMM. The upper and lower compute nodes each have their own dedicated DIMMs.

- Read Safety and Installation guidelines.

- Read the documentation that comes with the DIMMs.

- If the Flex System x222 Compute Node is installed in a chassis, remove it (see Removing a compute node from a chassis for instructions).

- Carefully lay the compute node on a flat, static-protective surface, orienting the compute node with the bezel pointing toward the left.

This component can be installed as an optional device or as a CRU. The installation procedure is the same for the optional device and the CRU.

After you install or remove a DIMM in the upper or lower compute node, you must change and save the new configuration information for that compute node by using the Setup utility. When you turn on the compute node where DIMMs have been installed or removed, a message indicates that the memory configuration has changed. Start the Setup utility and select Save Settings (see Using the Setup utility for more information) to save changes.

- Verify that the amount of installed memory matches the expected amount. You can check this through the operating system in the upper or lower compute node where you installed the DIMM, by watching the monitor as the compute node starts, by using the CMM sol command, or through Flex System Manager management software (if installed).

- For more information about the CMM sol command, see the "Flex System Chassis Management Module: Command-Line Interface Reference Guide".

- For more information about Flex System Manager management software, see the "Flex System Manager Software: Installation and Service Guide".

- Run the Setup utility in the upper or lower compute node where you installed the DIMM to reenable the DIMMs (see Using the Setup utility for more information).

The upper and lower compute nodes each have a total of 12 dual inline memory module (DIMM) connectors. The compute node supports low-profile (LP) DDR3 DIMMs with error-correcting code (ECC) in 4 GB, 8 GB, 16 GB, and 32 GB capacities.

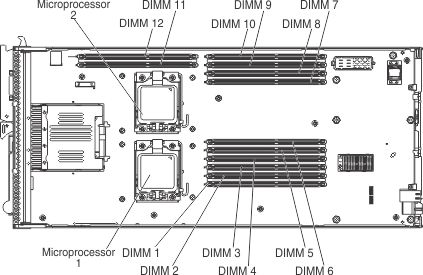

The following illustration shows the DIMM connectors in the lower compute node.

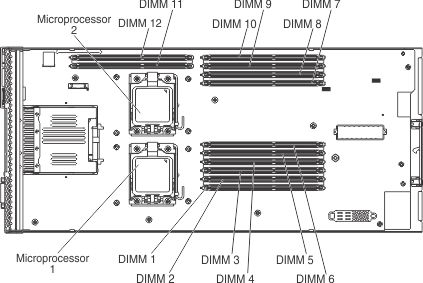

The following illustration shows the DIMM connectors in the upper compute node.

The memory is accessed internally through six channels, with three channels for each microprocessor. Each channel contains two DIMM connectors. Each channel can have one, two, or four ranks. The following tables list each channel for the upper and lower system boards and show which DIMM connectors are in the channel. Memory channel configuration for the upper and lower system boards is the same.

| Microprocessor | Memory channel | DIMM connectors |
|---|---|---|
| Microprocessor 1 | Channel A | 6 and 5 |
| Microprocessor 1 | Channel B | 4 and 3 |
| Microprocessor 1 | Channel C | 2 and 1 |
| Microprocessor 2 | Channel D | 12 and 11 |
| Microprocessor 2 | Channel E | 8 and 7 |
| Microprocessor 2 | Channel F | 10 and 9 |
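The channel layout can also be expressed as a small lookup, which is handy when a diagnostic message names a connector and you want to know which microprocessor and channel it belongs to. The following is a minimal sketch transcribed from the table; the names `CHANNELS` and `locate_connector` are hypothetical and are not part of any Flex System tool.

```python
# Channel map transcribed from the table: channel -> (microprocessor, connectors).
# The same map applies to the upper and lower system boards.
CHANNELS = {
    "A": (1, (6, 5)),
    "B": (1, (4, 3)),
    "C": (1, (2, 1)),
    "D": (2, (12, 11)),
    "E": (2, (8, 7)),
    "F": (2, (10, 9)),
}


def locate_connector(connector: int) -> tuple[int, str]:
    """Return (microprocessor, channel) for a DIMM connector numbered 1-12."""
    for channel, (microprocessor, connectors) in CHANNELS.items():
        if connector in connectors:
            return microprocessor, channel
    raise ValueError(f"no such DIMM connector: {connector}")
```

For example, `locate_connector(8)` returns `(2, "E")`, meaning connector 8 is on channel E of microprocessor 2.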

Depending on the memory mode that is set in the Setup utility, each compute node can support a minimum of 4 GB and a maximum of 192 GB of system memory with one microprocessor installed. If two microprocessors are installed, each compute node can support a minimum of 8 GB and a maximum of 384 GB of system memory.
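The limits follow from the connector count and the supported DIMM sizes: each microprocessor serves three channels of two connectors, and the largest supported DIMM is 32 GB. The sketch below works through that arithmetic. It assumes the minimum configuration is one smallest DIMM per installed microprocessor, which is an inference from the 4 GB and 8 GB figures rather than something the text states; the function name is hypothetical.

```python
CONNECTORS_PER_MICROPROCESSOR = 6  # three channels x two connectors each
DIMM_SIZES_GB = (4, 8, 16, 32)     # supported LP DDR3 ECC capacities


def memory_range_gb(microprocessors: int) -> tuple[int, int]:
    """Return (minimum, maximum) system memory in GB for one compute node."""
    if microprocessors not in (1, 2):
        raise ValueError("a compute node has one or two microprocessors")
    # Assumption: at least one smallest DIMM per installed microprocessor.
    minimum = microprocessors * min(DIMM_SIZES_GB)
    # Every connector populated with the largest supported DIMM.
    maximum = microprocessors * CONNECTORS_PER_MICROPROCESSOR * max(DIMM_SIZES_GB)
    return minimum, maximum
```

With one microprocessor this gives 6 x 32 GB = 192 GB at most; with two it gives 12 x 32 GB = 384 GB.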

- You cannot mix RDIMMs and LRDIMMs in the same compute node.

- A total of two ranks on each of the three memory channels is supported.

- Independent-channel mode: Independent-channel mode provides a maximum of 192 GB of usable memory for each compute node with one installed microprocessor, and 384 GB of usable memory for each compute node with two installed microprocessors (using optional 32 GB DIMMs).

- Rank-sparing mode: In rank-sparing mode, one memory DIMM rank serves as a spare for the other ranks on the same channel. The spare rank is held in reserve and is not used as active memory. The spare rank must have a memory capacity equal to or larger than that of every other active DIMM rank on the same channel. After an error threshold is surpassed, the contents of the failing rank are copied to the spare rank. The failed rank is taken offline, and the spare rank is put online and used as active memory in place of the failed rank. The following notes describe additional information that you must consider when you select rank-sparing memory mode:

  - Rank-sparing on one channel is independent of the sparing on all other channels.

  - You can use the Setup utility to determine the status of the DIMM ranks.

- Mirrored-channel mode: In mirrored-channel mode, memory is installed in pairs. Each DIMM in a pair must be identical in size and architecture. The channels are grouped in pairs with each channel receiving the same data. One channel is used as a backup of the other, which provides redundancy. In mirrored-channel mode, only channels B and C are used, with the memory contents on channel B duplicated in channel C (channel A is not used in mirrored-channel mode). When mirroring, the effective memory that is available to the system is only half of what is installed.
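The rules in the list above lend themselves to a quick pre-installation check. The following is a rough sketch only: the function names, the mode strings, and the representation of DIMMs as type strings and rank sizes in GB are all assumptions made for illustration, and the Setup utility remains the authority on what a given configuration supports.

```python
def dimm_types_compatible(dimm_types: list[str]) -> bool:
    """RDIMMs and LRDIMMs cannot be mixed in the same compute node."""
    kinds = set(dimm_types)
    return not {"RDIMM", "LRDIMM"} <= kinds


def usable_memory_gb(installed_gb: float, mode: str) -> float:
    """Usable memory for the installed total under the given memory mode."""
    if mode == "independent":
        return installed_gb
    if mode == "mirrored":
        # Each channel of a pair holds the same data, so half is usable.
        return installed_gb / 2
    raise ValueError(f"unsupported mode in this sketch: {mode}")


def spare_rank_is_valid(spare_gb: float, active_ranks_gb: list[float]) -> bool:
    """The spare rank must be at least as large as every active rank
    on the same channel."""
    return all(spare_gb >= rank for rank in active_ranks_gb)
```

For instance, 64 GB installed in mirrored-channel mode leaves 32 GB available to the system, and a 4 GB spare rank cannot back an 8 GB active rank.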

| One installed microprocessor | Two installed microprocessors |
|---|---|
| DIMM connectors 6, 4, 2, 5, 3, and 1 | DIMM connectors 6, 12, 4, 8, 2, 10, 5, 11, 3, 7, 1, and 9 |
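If the connector lists in this table are read as a population sequence (an assumption; the table itself carries no label here), choosing the connectors for a given number of DIMMs is a matter of taking a prefix of the list. A minimal sketch, with hypothetical names:

```python
# Connector sequences transcribed from the table, keyed by the number of
# installed microprocessors. Reading them as an install order is an assumption.
INSTALL_ORDER = {
    1: (6, 4, 2, 5, 3, 1),
    2: (6, 12, 4, 8, 2, 10, 5, 11, 3, 7, 1, 9),
}


def connectors_to_populate(dimm_count: int, microprocessors: int) -> list[int]:
    """Return the connectors to fill, in order, for the given DIMM count."""
    order = INSTALL_ORDER[microprocessors]
    if not 0 <= dimm_count <= len(order):
        raise ValueError(f"DIMM count must be between 0 and {len(order)}")
    return list(order[:dimm_count])
```

With two microprocessors and four DIMMs, this yields connectors 6, 12, 4, and 8, which alternates between the two microprocessors.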

Install DIMM pairs in order as indicated in the following table for mirrored-channel mode.

| DIMM pair | 1 microprocessor installed | 2 microprocessors installed | DIMMs per channel |
|---|---|---|---|
| 1 | DIMMs 4 and 2 | DIMMs 4 and 2 | 1 |
| 2 | DIMMs 3 and 1 | DIMMs 8 and 10 | |
| 3 | none | DIMMs 3 and 1 | |
| 4 | none | DIMMs 7 and 9 | |
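The pairing order for mirrored-channel mode can be sketched the same way. The pairs below assume connector numbers 4 and 2, 3 and 1, 8 and 10, and 7 and 9, which is the only reading consistent with connectors numbered 1 through 12 and with channels B and C (and their counterparts E and F on microprocessor 2) mirroring each other. The names are hypothetical.

```python
# Mirrored-channel DIMM pairs in install order, keyed by the number of
# installed microprocessors (connector numbers assumed as described above).
MIRRORED_PAIRS = {
    1: ((4, 2), (3, 1)),
    2: ((4, 2), (8, 10), (3, 1), (7, 9)),
}


def mirrored_connectors(pair_count: int, microprocessors: int) -> list[tuple[int, int]]:
    """Return the connector pairs to populate for the given number of pairs."""
    pairs = MIRRORED_PAIRS[microprocessors]
    if not 1 <= pair_count <= len(pairs):
        raise ValueError(f"pair count must be between 1 and {len(pairs)}")
    return list(pairs[:pair_count])
```

For two microprocessors and two pairs, the result is `[(4, 2), (8, 10)]`: one pair per microprocessor before any channel receives a second DIMM.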

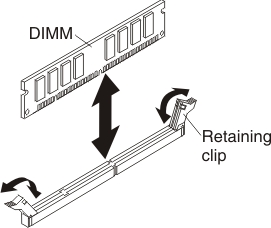

To install a DIMM, complete the following steps:

- Install the upper compute node (see Installing the upper compute node for instructions).

- Install the Flex System x222 Compute Node into the chassis (see Installing a compute node in a chassis for instructions).